Abstract

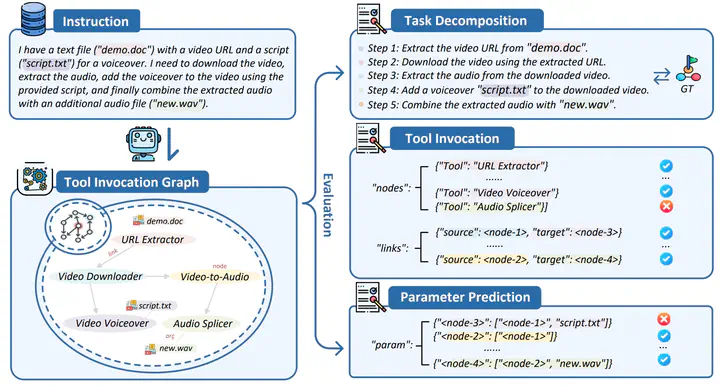

Recently, the incredible progress of large language models (LLMs) has ignited the spark of task automation, which decomposes the complex tasks described by user instructions into sub-tasks, and invokes external tools to execute them, and plays a central role in autonomous agents. However, there lacks a systematic and standardized benchmark to foster the development of LLMs in task automation. To this end, we introduce TASKBENCH to evaluate the capability of LLMs in task automation. Specifically, task automation can be formulated into three critical stages: task decomposition, tool invocation, and parameter prediction to fulfill user intent. This complexity makes data collection and evaluation more challenging compared to common NLP tasks. To generate high-quality evaluation datasets, we introduce the concept of Tool Graph to represent the decomposed tasks in user intent, and adopt a back-instruct method to simulate user instruction and annotations. Furthermore, we propose TASKEVAL to evaluate the capability of LLMs from different aspects, including task decomposition, tool invocation, and parameter prediction. Experimental results demonstrate that TASKBENCH can effectively reflects the capability of LLMs in task automation. Benefiting from the mixture of automated data construction and human verification, TASKBENCH achieves a high consistency compared to the human evaluation, which can be utilized as a comprehensive and faithful benchmark for LLM-based autonomous agents.